AnyClip’s Visual Intelligence™ Platform is revolutionizing how business does video.

We power advanced video products so smart, they’re Genius.

OUR PRODUCTS

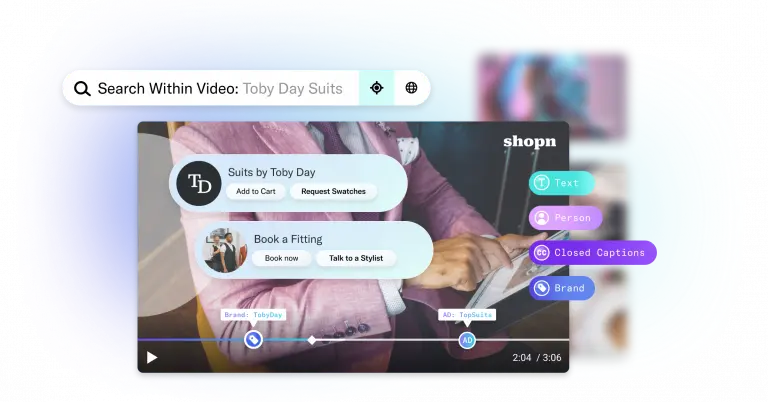

The AI-powered video management platform for customer-facing communications that converts traditional video into intelligent content that is fully enabled—searchable, measurable, personalized, merchandised and interactive.

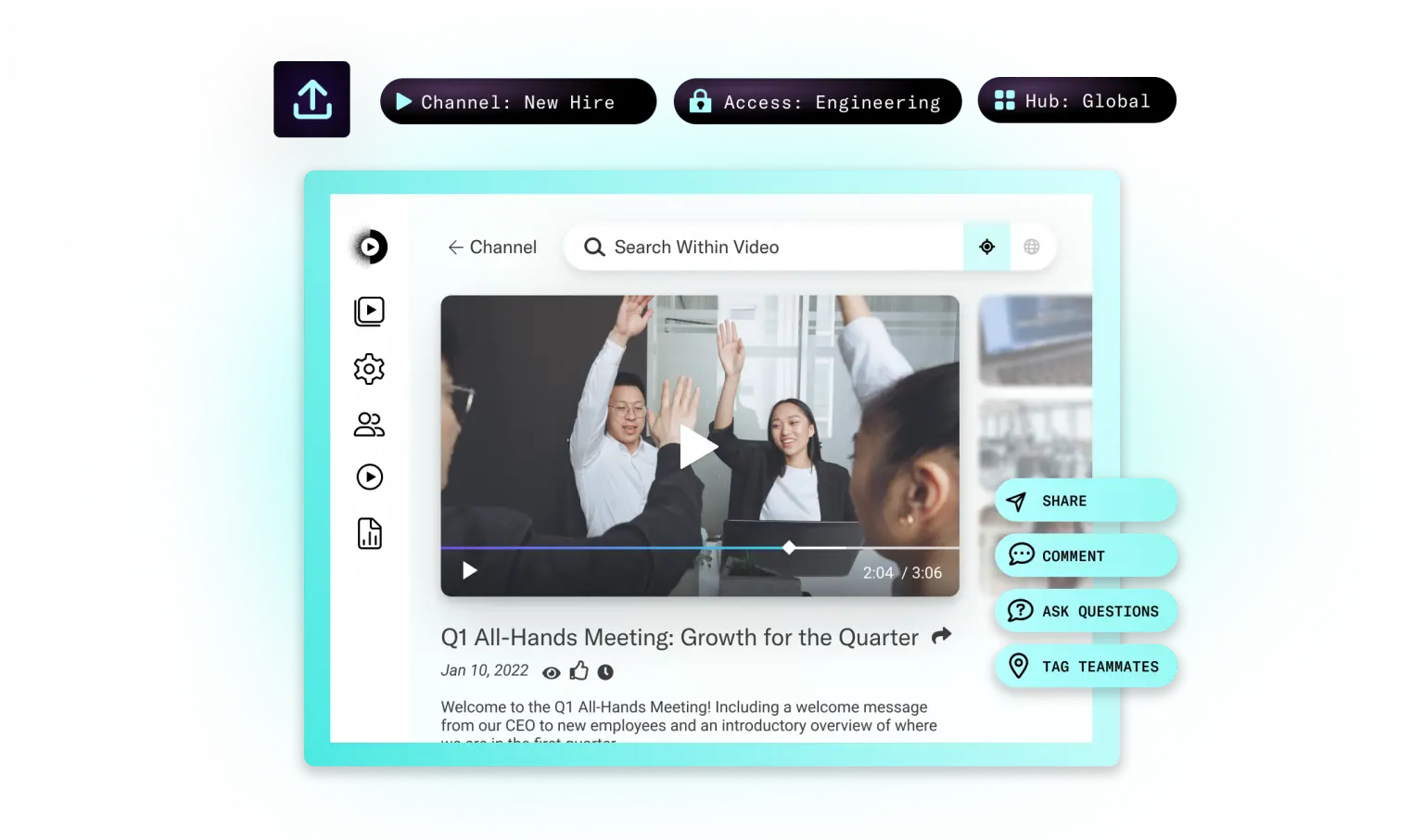

The AI-powered internal communications platform for knowledge sharing and collaboration.

The AI-powered data enhancement and machine learning models that, when added to your workflows, turn traditional video into fully enabled, data-enriched smart video.

WHAT IS VISUAL INTELLIGENCE?

By instantly activating the innate data in video with AI, the power once reserved for text (transparency, interactivity and collaboration) is now available for the most desired and prevalent form of communication: video.

Instantly and automatically analyze every video, frame by frame, for brands, objects, people, spoken words, text, content category and brand safety.

Drive connectivity, productivity, and collaboration with video.

Centralize all your video content in one place so you can automatically manage, organize, host, and distribute.

Empower your business with video, from external-facing marketing and customer communication to secure internal video Hubs for employees.

Any Video

What our customers are saying.

“AnyClip enables that video, unlocking the intelligence within content, making it instantly accessible and actionable in real time, which is essential to our partner network, globally.”

Joan Morales, Head of Partner Marketing, Zoom

“AnyClip not only offers a superior video platform for our monetization, but they give us the partnership we are looking for, so we can optimize our ad experiences to the fullest.”

Lincoln Gunn, VP of Programmatic Revenue, Fandom

“AnyClip’s technology allows an entire search of our library with a simple keyword search.”

Gina Grillo, President and CEO, The Advertising Club of New York